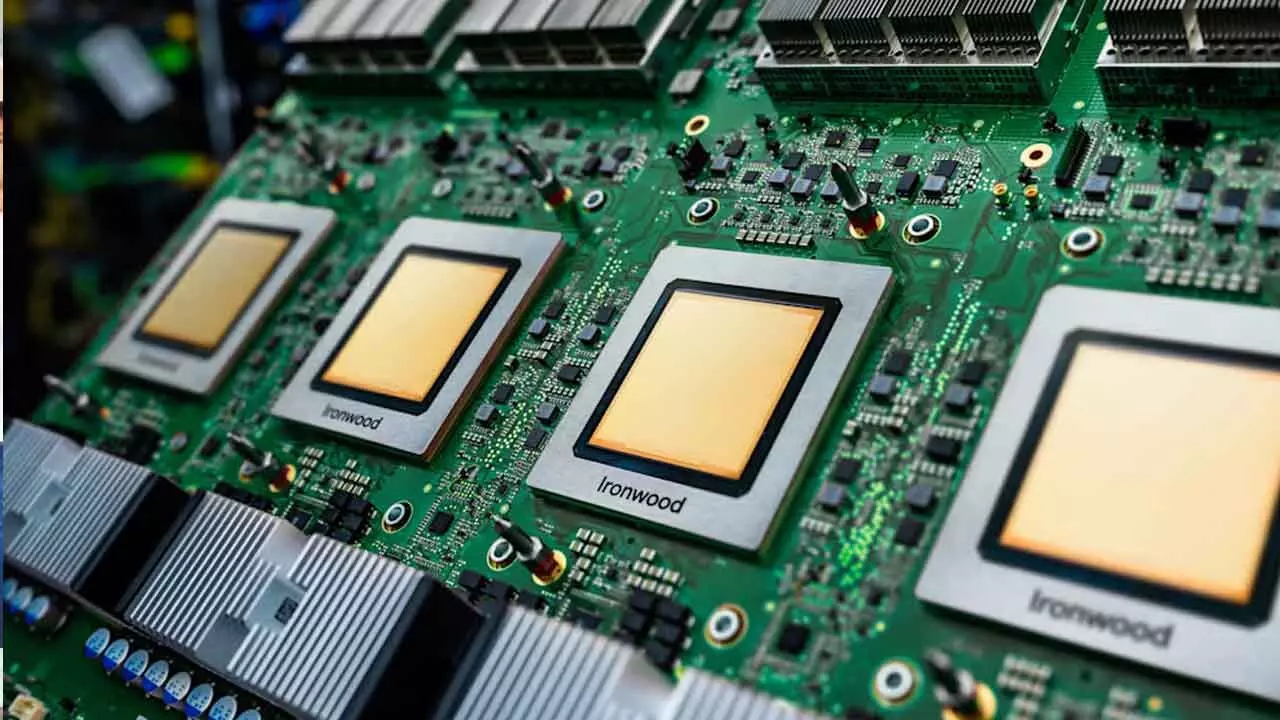

Google Unveils Ironwood TPUs: Its Most Powerful AI Chip Yet to Rival Nvidia and Microsoft

Google launches Ironwood, its most powerful AI chip yet, delivering more than fourfold performance gains over its predecessor to challenge Nvidia and redefine AI infrastructure.

In a major leap for artificial intelligence infrastructure, Google has unveiled Ironwood, the seventh generation of its Tensor Processing Unit (TPU) and its most advanced AI processor to date. The announcement signals Google’s boldest move yet to challenge Nvidia’s dominance in the AI chip market and expand its foothold in cloud computing.

Revealed on Thursday, the Ironwood TPU is engineered both to train massive AI models and to run real-time applications such as chatbots and virtual agents. Built entirely in-house, the chip represents the culmination of nearly a decade of Google’s custom silicon research aimed at optimizing AI workloads.

According to the company, the Ironwood architecture can interconnect up to 9,216 chips within a single pod, an arrangement Google says largely eliminates data-transfer bottlenecks and lets developers run and scale some of the world’s most demanding AI systems. Google claims that Ironwood delivers more than four times the performance of its previous TPU generation, along with major gains in energy efficiency and scalability.

The chip was deployed on a limited basis earlier this year, and Google plans to make it broadly available in the coming weeks. Early adopters include AI startup Anthropic, one of Google’s key partners, which intends to use up to one million Ironwood TPUs to power its Claude AI model. The partnership underscores the growing appetite among major AI developers for alternatives to Nvidia’s GPU infrastructure.

The launch arrives at a pivotal time as Google ramps up efforts to strengthen its cloud computing business, positioning itself as a top-tier provider of AI infrastructure alongside Microsoft Azure and Amazon Web Services (AWS). Along with Ironwood, Google is rolling out a suite of upgrades designed to make its cloud AI services faster, more affordable, and more flexible for enterprise users.

Google Cloud continues to post impressive growth numbers. The company reported $15.15 billion in revenue in Q3 2025, marking a 34 percent year-on-year increase. By comparison, Microsoft Azure grew 40 percent, while AWS expanded 20 percent during the same period.

To meet soaring global demand for AI computing power, Google has raised its capital expenditure forecast for 2025 to $93 billion, up from $85 billion earlier. “We are seeing substantial demand for our AI infrastructure products, including TPU-based and GPU-based solutions,” said Sundar Pichai, CEO of Google. “It is one of the key drivers of our growth over the past year, and we continue to see very strong demand going forward.”

While Nvidia’s GPUs remain the industry standard for AI training and inference, Google’s Ironwood TPUs highlight a growing push to offer custom, energy-efficient, and cost-effective alternatives for enterprises. As the AI revolution accelerates, the Ironwood launch marks a critical step in Google’s long-term mission to define the next generation of computing infrastructure for the world’s most advanced AI systems.